The tutorial will assume you are using a Linux OS.

WHERE DOES PIP INSTALL PYSPARK HOW TO

Most users with a Python background take the pip install workflow for granted for all popular Python packages. However, the PySpark+Jupyter combo needs a little bit more love. In this brief tutorial, we'll go over step by step how to set up PySpark and all its dependencies on your system, and then how to integrate it with Jupyter Notebook.

If you are proficient in Python/Jupyter and machine learning tasks, it makes perfect sense to start by spinning up a single cluster on your local machine. You could also run one on an Amazon EC2 instance if you want more storage and memory.

Remember, Spark is not a new programming language that you have to learn. Instead, it is a framework working on top of HDFS. This presents new concepts like nodes, lazy evaluation, and the transformation-action (or 'map and reduce') paradigm of programming. In fact, Spark is versatile enough to work with file systems other than Hadoop, such as Amazon S3 or Databricks (DBFS).

But the idea is always the same. You are distributing (and replicating) your large dataset in small fixed chunks over many nodes. You then bring the compute engine close to them so that the whole operation is parallelized, fault-tolerant and scalable.

By working with PySpark and Jupyter Notebook, you can learn all these concepts without spending anything. You can also easily interface with SparkSQL and MLlib for database manipulation and machine learning. It will be much easier to start working with real-life large clusters if you have internalized these concepts beforehand!

However, unlike most Python libraries, starting with PySpark is not as straightforward as pip install.
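The chunk-and-compute idea described here can be illustrated without Spark at all. What follows is a plain-Python analogy, not PySpark code: the function names (`split_into_chunks`, `lazy_square`, `total`) are hypothetical, a generator stands in for a lazy transformation, and consuming it stands in for an action. No nodes, replication, or fault tolerance are modeled.

```python
from functools import reduce
from typing import Iterable, Iterator, List


def split_into_chunks(data: List[int], chunk_size: int) -> List[List[int]]:
    """Cut the dataset into fixed-size chunks (the last one may be shorter)."""
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


def lazy_square(chunk: Iterable[int]) -> Iterator[int]:
    """A 'transformation': returns a generator, so nothing is computed yet."""
    return (x * x for x in chunk)


def total(partials: Iterable[int]) -> int:
    """The 'reduce' step: combine the per-chunk results into one value."""
    return reduce(lambda a, b: a + b, partials, 0)


data = list(range(1, 11))                      # the "large" dataset: 1..10
chunks = split_into_chunks(data, chunk_size=4)

# Describe the work per chunk; the generators are not evaluated here.
pending = [lazy_square(chunk) for chunk in chunks]

# An 'action': consuming each generator forces the computation per chunk.
partial_sums = [sum(p) for p in pending]

result = total(partial_sums)
print(partial_sums, result)
```

In a real cluster each chunk would live on a different node and the per-chunk work would run there in parallel; here everything runs in one process purely to show the shape of the computation.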

WHERE DOES PIP INSTALL PYSPARK FREE

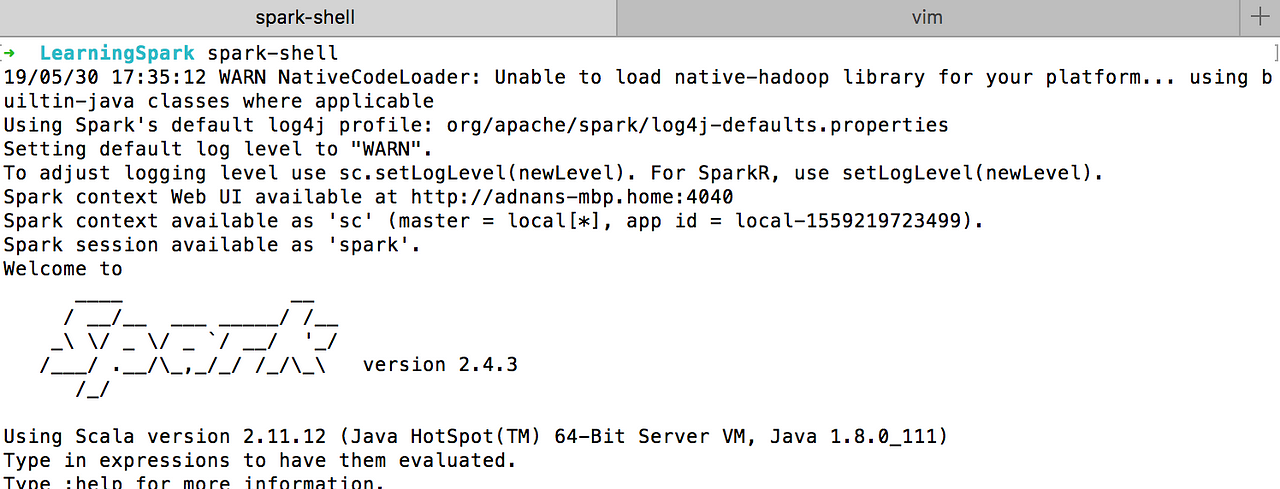

Apache Spark is one of the hottest frameworks in data science. It realizes the potential of bringing together both Big Data and machine learning:

- Spark is fast (up to 100x faster than traditional Hadoop MapReduce) due to in-memory operation.
- It offers robust, distributed, fault-tolerant data objects (called RDDs).
- It integrates beautifully with the world of machine learning and graph analytics through supplementary packages like MLlib and GraphX.

Spark is implemented on Hadoop/HDFS and written mostly in Scala, a functional programming language which runs on the JVM. However, for most beginners, Scala is not a great first language to learn when venturing into the world of data science.

Fortunately, Spark provides a wonderful Python API called PySpark. This allows Python programmers to interface with the Spark framework, letting you manipulate data at scale and work with objects over a distributed file system.

Now, the promise of a Big Data framework like Spark is only truly realized when it is run on a cluster with a large number of nodes. Unfortunately, to learn and practice that, you have to spend money. Some options:

- Amazon Elastic MapReduce (EMR) cluster with S3 storage.
- Databricks cluster (paid version; the free community version is rather limited in storage and clustering options).

The above options cost money just to even start learning (Amazon EMR is not included in the one-year Free Tier program, unlike EC2 or S3 instances).

I just faced the same issue, but it turned out that pip install pyspark downloads a Spark distribution that works well in local mode. Pip just doesn't set an appropriate SPARK_HOME. But when I set this manually, pyspark works like a charm (without downloading any additional packages).

    Running setup.py bdist_wheel for pyspark.
    Installing collected packages: py4j, pyspark
    Successfully installed py4j-0.10.6 pyspark-2.3.0
    $ PYSPARK_PYTHON=python3 SPARK_HOME=~/.local/lib/python3.5/site-packages/pyspark pyspark
    Type "help", "copyright", "credits" or "license" for more information.
    14:02:39 WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    To adjust logging level use sc.setLogLevel(newLevel).
    Using Python version 3.5.
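Since the answer reports that pip installs a working distribution but leaves SPARK_HOME unset, one way to avoid hard-coding the site-packages path is to ask Python where the package was installed. This is a minimal sketch under stated assumptions: the helper names `find_pip_pyspark_home` and `ensure_spark_home` are hypothetical, and it assumes (as the answer's command suggests) that the installed `pyspark` package directory itself is a usable SPARK_HOME. Only standard-library calls are used, and the package is located without being imported.

```python
import importlib.util
import os
from pathlib import Path
from typing import MutableMapping, Optional


def find_pip_pyspark_home() -> Optional[str]:
    """Return the directory of the installed pyspark package, or None.

    find_spec locates a top-level package without importing it.
    """
    spec = importlib.util.find_spec("pyspark")
    if spec is None or spec.origin is None:
        return None  # pyspark is not installed in this environment
    # spec.origin points at .../pyspark/__init__.py; its parent is the package dir.
    return str(Path(spec.origin).parent)


def ensure_spark_home(env: MutableMapping[str, str] = os.environ) -> Optional[str]:
    """Keep an existing SPARK_HOME; otherwise fall back to the pip location.

    Assumption: the pip-installed package directory works as SPARK_HOME.
    """
    if env.get("SPARK_HOME"):
        return env["SPARK_HOME"]
    home = find_pip_pyspark_home()
    if home is not None:
        env["SPARK_HOME"] = home
    return home


if __name__ == "__main__":
    print("SPARK_HOME:", ensure_spark_home())
```

Run with the same interpreter you used for pip install; the printed path answers where pip put PySpark on that machine, and it prints None if the package is not installed there.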
